Abstract

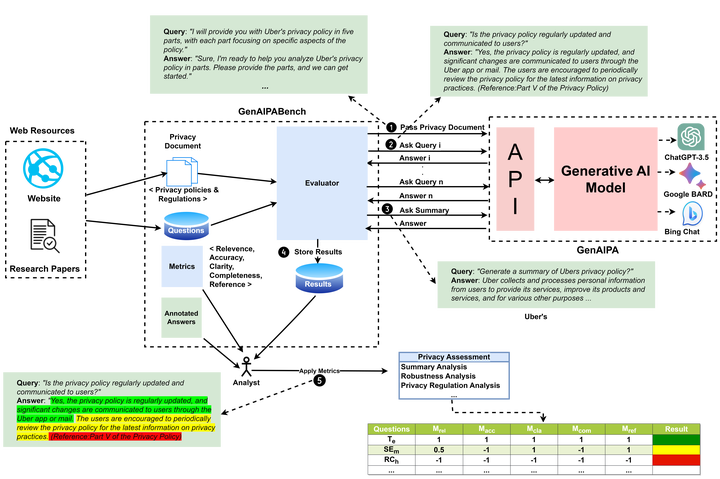

In the age of data-driven technology, privacy has emerged as a critical concern for both users and organizations. Privacy policies are widely used to outline a company’s data management practices. However, it has been demonstrated that privacy policies, as well as regulations, are generally too complex for people to understand, which motivates the need for privacy assistants. The emergence of generative AI (genAI) technology presents an opportunity to improve the ability of privacy assistants to answer questions people might have about their privacy. However, while textual genAI technology generates content that seems accurate, it has been shown that it can generate false or incorrect information (e.g., due to its tendency to hallucinate), which might mislead the user. This paper presents GENAIPABENCH, a novel privacy benchmark to evaluate the performance of generative AI-based privacy assistants (GenAIPAs). GENAIPABENCH includes: 1) a comprehensive set of questions about an organization’s privacy policy, with annotated answers, together with questions about data protection regulations; 2) a set of metrics to evaluate the answers obtained from the generative AI system; and 3) an evaluation tool that generates appropriate prompts to introduce the system to the privacy document, as well as different variations of the privacy questions to evaluate its robustness. We present an evaluation of OpenAI’s ChatGPT using GENAIPABENCH. Our findings indicate that ChatGPT holds considerable promise as a privacy agent, although it faces challenges in handling complex tasks, ensuring response consistency, and accurately referencing the information provided.